What is the Value of a Wicket in T20 Cricket?

For almost all of the sport’s history, being a good batting team in cricket has been all about one thing: not losing wickets. When time is not a limiting factor, scoring runs quickly is not of huge importance. If you bat for long enough, the runs will come naturally. T20 cricket, however, changed all of that.

In T20 cricket, both teams have just 20 overs (120 balls) to bat. Time becomes a huge limiting factor and changes the calculation for the tradeoff between runs scored and wickets lost. Scoring runs, and scoring them quickly, becomes paramount, and losing wickets is not as big a problem. While teams initially approached T20 in a similar way to 40- and 50-over games (preserving wickets before exploding at the end), in recent years the format has matured and tactics have evolved. A key realisation has been that losing wickets does not matter as much as scoring quickly.

In this year’s Indian Premier League (IPL), the world’s premier T20 league, we saw the clearest indication of teams understanding the low value of losing wickets. Ravichandran Ashwin, playing for Rajasthan Royals, was intentionally retired out. A batter retiring is something that usually only occurs when they are injured; after all, why on earth would you voluntarily lose a wicket? But in T20 cricket, the situation is different. Ashwin, a good batter but not adept at scoring very quickly, was retired out near the end of the innings to allow Riyan Parag, a much faster-scoring batter, to take his place. Ashwin had scored 28 off 23 balls, while Parag came in and scored 8 runs off 4 balls. Based on his rate of scoring, if Ashwin had faced those 4 balls he would’ve been expected to score just 5 runs. The difference between Ashwin’s expected score and Parag’s actual score from those 4 balls? 3 runs, the exact amount by which Rajasthan won the game. This was the ultimate example of the belief that wickets in T20 cricket don’t matter all that much.
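The "expected 5 runs" figure is simply Ashwin’s scoring rate in that innings multiplied by the 4 balls Parag faced. A minimal sketch of that arithmetic, assuming (as the paragraph does) that Ashwin would have kept scoring at his innings-to-date rate; `expected_runs` is a hypothetical helper name:

```python
def expected_runs(runs: int, balls: int, balls_remaining: int) -> float:
    """Project a batter's runs over `balls_remaining` balls at their scoring rate so far."""
    return runs / balls * balls_remaining

ashwin_expected = expected_runs(28, 23, 4)  # Ashwin: 28 off 23, projected over 4 balls
parag_actual = 8                             # Parag: 8 off 4

print(round(ashwin_expected, 2))                # 4.87
print(round(parag_actual - ashwin_expected))    # 3
```

The projection ignores that scoring rates usually rise at the death, so it is a rough baseline rather than a true counterfactual.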

We might know that wickets aren’t as valuable in T20 cricket as in Test or one-day cricket, but just how much does losing a wicket impact a team’s eventual score? Can it really be true that losing a wicket has little-to-no impact? This article is all about exploring those questions and attempting to quantify the value of wickets in T20 cricket.

Method

To investigate the value of lost wickets in T20s, I needed data on the number of runs scored and wickets lost in T20 innings, as well as the point in the innings at which each wicket fell. To get it, I used web scraping to download scoring information from every game in IPL history dating back to the league’s inception in 2008. For each game, I had the total number of runs scored, total number of wickets lost, and the ball of the innings at which each wicket was lost (e.g. first wicket lost on the 4th ball of the 2nd over). After removing games that were cut short by rain, I had a dataset with 894 matches over 14 years.

I am only using information from the first innings of matches, when the first team bats. When a team is batting second, the dynamics of their innings change: they are batting not just against time but also to reach a target, so the impact that wickets have on their scoring depends on the score they are chasing. For these reasons, this piece focuses only on teams batting first.

With the dataset created, I used regressions to analyse the impact of losing wickets on the number of runs that a team scores. With such a large number of matches, the data include a wide spread of teams and venues, so differences in team strategy or conditions should largely average out across the whole dataset.

First Look at the Numbers

Just investigating the summary of the data, there does appear to be some relationship between wickets lost and runs scored in T20 cricket. In general, the more wickets a team loses, the fewer runs they score. There is a lot of variation in scores and wickets lost across so many matches, but the general trend is clear. The graph below shows a scatter plot of runs scored and wickets lost in all IPL games, with a line-of-best-fit showing the general trend between the two.

The graph indicates that, on average, losing more wickets does reduce a team’s score, although not in every innings. To estimate the size of that effect, I ran a basic regression of runs scored on wickets lost. The results are shown in the image below.

The intercept row shows the number of runs that a team would be expected to score if they lost zero wickets, 211 runs (to the nearest whole run). The number that we’re interested in is the total_wkts row: -7.9988. This coefficient is the impact of losing one additional wicket on the expected number of runs scored in the innings. So, this estimates that for every one additional wicket that a team loses, their expected runs total will decrease by 8 runs.
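The basic model is a one-variable ordinary least squares fit, runs = intercept + slope × wickets. A minimal sketch of that fit, assuming each innings is reduced to a (wickets lost, runs scored) pair; the function names and the toy data are hypothetical, and the author’s own code (linked at the end) may use a regression library instead:

```python
import numpy as np

def fit_runs_on_wickets(wickets, runs):
    """Simple OLS of runs on wickets. Returns (intercept, slope)."""
    x = np.asarray(wickets, dtype=float)
    y = np.asarray(runs, dtype=float)
    slope = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
    intercept = y.mean() - slope * x.mean()
    return intercept, slope

def predicted_total(wickets_lost, intercept=211.0, slope=-7.9988):
    """Expected total from a fitted line; defaults are the article's estimates (intercept rounded)."""
    return intercept + slope * wickets_lost

# Made-up illustrative innings, NOT the article's IPL data
b0, b1 = fit_runs_on_wickets([3, 5, 6, 7, 8, 9], [190, 175, 170, 158, 150, 140])
print(f"intercept {b0:.1f}, runs per wicket {b1:.2f}")

print(round(predicted_total(6)))  # 163
```

With the article’s estimates, a team losing 6 wickets would be expected to make about 163; each extra wicket moves that down by roughly 8.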

Not all Created Equal

Finding that losing a wicket is worth approximately 8 runs is a good start. But this most basic model assumes that every wicket is worth the same amount and happens at the same time, which obviously is not the case. A wicket from the first ball of the innings is clearly different to a wicket from the final ball of the innings. Likewise, losing one of your opening batters is completely different to losing your number 10 (one of the worst batters, on paper). Quite simply, not all wickets are created equal.

So, I looked more closely at how the timing of a wicket changes its impact on runs scored. To do so, I totalled the number of wickets lost in each over of every IPL match. Using a new regression model, we can estimate the impact of a wicket in each over of a T20 game to see how the timing of a wicket changes things. I expected that wickets early in the innings, particularly in the powerplay (first 6 overs), would be most impactful, with the value of wickets decreasing towards the end of the innings. The impact of a wicket in each over of a T20 match is shown in the graph below. (Note that the impact of losing wickets is scoring fewer runs, so the higher the bar, the more losing a wicket will decrease a team’s total score.)
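The over-by-over model replaces the single wickets column with 20 columns, one per over, each holding the number of wickets that fell in that over. A minimal sketch, assuming each innings is stored as a list of the overs (1 to 20) in which its wickets fell; the helper names are hypothetical and the simulated check uses made-up per-over values, not the article’s estimates:

```python
import numpy as np

N_OVERS = 20

def wickets_per_over(wicket_overs, n_overs=N_OVERS):
    """Count the wickets that fell in each over (overs numbered 1..n_overs)."""
    counts = np.zeros(n_overs)
    for over in wicket_overs:
        counts[over - 1] += 1
    return counts

def fit_per_over_values(innings_wicket_overs, totals):
    """OLS of innings total on 20 per-over wicket counts plus an intercept.

    Returns (intercept, coefs); coefs[k] is the estimated change in the total
    for one extra wicket in over k + 1, so a negative value means runs lost.
    """
    X = np.array([wickets_per_over(w) for w in innings_wicket_overs])
    X = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X, np.asarray(totals, dtype=float), rcond=None)
    return beta[0], beta[1:]

# Sanity check on simulated innings with known (made-up) per-over values
rng = np.random.default_rng(0)
true_values = np.linspace(15, 1, N_OVERS)  # hypothetical runs lost per wicket, over 1..20
innings = [np.repeat(np.arange(1, N_OVERS + 1), rng.poisson(0.3, N_OVERS)) for _ in range(500)]
totals = [200 - sum(true_values[o - 1] for o in w) for w in innings]

intercept, coefs = fit_per_over_values(innings, totals)
print(np.round(-coefs, 2))  # matches true_values, since the simulated totals have no noise
```

The bars in the graph correspond to the negated coefficients, `-coefs`: the runs a wicket in that over takes off the expected total.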

The graph shows that the expected trend does largely hold: wickets are most valuable in the first 6 overs, each costing around 15 runs. A wicket is then worth around 12-13 runs in the middle overs (7-13), before the value tapers off significantly from over 14 until the end. The final over of the innings shows a steep drop in the value of a wicket, which is worth less than a run. This makes sense because teams will not be holding back by that stage of the innings even if they lose a wicket, and there is not enough time left for a lost wicket to have much impact on the score.

Another striking result from this over-by-over analysis is how different these results are to the first regression that did not account for the timing of a wicket. The initial estimate was that a wicket was worth around 8 runs. We can now estimate that losing a wicket actually costs significantly more than that until you get to the 14th over, at which point wickets are worth far less than 8 runs. Because so many wickets fall at the death as teams try to attack, the lower impact of wickets at the death skewed the overall number to 8, even though losing a wicket early in the innings costs roughly double that many runs. By digging into over-by-over stats, it’s possible to see that wickets are actually more valuable than first thought.
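Why does a single pooled coefficient land so far below the early-over values? Regressing the total on total wickets yields a weighted average of the over-by-over values, and overs where more wickets fall carry more weight. A small simulation sketch under made-up assumptions (per-over values falling from 15 to 1 runs, wicket rates rising towards the death, no other noise), not a re-estimate from the IPL data:

```python
import numpy as np

rng = np.random.default_rng(1)
n_innings, n_overs = 3000, 20
# Hypothetical inputs, not estimates from the IPL data:
true_values = np.linspace(15, 1, n_overs)      # runs lost per wicket, over 1..20
wicket_rates = np.linspace(0.2, 0.8, n_overs)  # more wickets fall at the death

W = rng.poisson(wicket_rates, size=(n_innings, n_overs))  # wickets per over, per innings
totals = 200 - W @ true_values
total_wkts = W.sum(axis=1)

slope, intercept = np.polyfit(total_wkts, totals, 1)  # pooled model: total ~ total wickets
pooled_value = -slope

# With independent Poisson counts, the pooled slope targets a rate-weighted average
weighted_average = np.sum(wicket_rates * true_values) / wicket_rates.sum()
print(f"pooled value per wicket:    {pooled_value:.1f}")
print(f"rate-weighted average:      {weighted_average:.1f}")
print(f"average powerplay value:    {true_values[:6].mean():.1f}")
```

Even though every powerplay wicket in this toy setup costs more than 11 runs, the pooled estimate comes out well below that, because the many cheap death-over wickets dominate the fit.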

So Many Wickets, So Little Time

So far we have looked at the impact of losing wickets and the differing impact of losing wickets at different stages of the innings. Until now, though, we have not looked at the spacing of wickets falling and how that impacts runs scored. The previous section estimated the impact of, say, losing a wicket in the 13th or 14th over, but it did not estimate the impact of losing a wicket in both the 13th and 14th overs. The spacing of wickets is important because wickets falling in clusters, e.g. in the same over or consecutive overs, may have multiplier effects compared to losing two wickets 5 overs apart.

To begin digging into the spacing of wickets, I looked at the number of wickets lost in the powerplay of each innings. Losing three wickets in the powerplay is famously associated with a much higher chance of losing the match because wickets not only dismiss good batters but also limit a team’s ability to attack for the remainder of the innings. Looking at powerplay wickets, I expected to see strong multiplier effects: the difference between losing 0 and 1 wicket being much smaller than the difference between losing 2 and 3 wickets, because each additional wicket more seriously limits a team’s batting resources. Below is a graph showing the number of wickets lost in the powerplay and the impact that losing those wickets has on the expected runs total.
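To let each additional powerplay wicket have its own effect (rather than forcing a constant cost per wicket), the wicket count can be entered as categories, with 0 wickets as the baseline. A minimal sketch, assuming counts above 4 are pooled into a "4 or more" group and that every group appears in the data; the helper names and toy data are hypothetical, and the article’s model may include other controls:

```python
import numpy as np

def powerplay_dummies(pp_wickets, cap=4):
    """One 0/1 column per powerplay wicket count 1..cap (cap means 'cap or more'); 0 is the baseline."""
    pp = np.minimum(np.asarray(pp_wickets), cap)
    return np.column_stack([(pp == k).astype(float) for k in range(1, cap + 1)])

def fit_powerplay_effects(pp_wickets, totals, cap=4):
    """Expected change in total, relative to losing no powerplay wickets, for each count."""
    X = np.column_stack([np.ones(len(pp_wickets)), powerplay_dummies(pp_wickets, cap)])
    beta, *_ = np.linalg.lstsq(X, np.asarray(totals, dtype=float), rcond=None)
    return {k: beta[k] for k in range(1, cap + 1)}

# Made-up toy innings, NOT the article's data
pp = [0, 0, 1, 1, 2, 3, 4, 5]
totals = [180, 170, 170, 170, 160, 150, 140, 130]
effects = fit_powerplay_effects(pp, totals)
print({k: round(float(v), 1) for k, v in effects.items()})  # {1: -5.0, 2: -15.0, 3: -25.0, 4: -40.0}
```

With only these dummy columns, each coefficient is just the difference between that group’s average total and the average total of teams that lost no powerplay wickets.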

The graph shows that losing one wicket in the powerplay costs around 5 runs, with a big jump to 15 runs for losing two. The gap between losing two and three wickets is much smaller, with losing three wickets in the powerplay associated with a 21-run decrease (just 6 runs more than losing two). The multiplier effect appears when going from three to four wickets: losing four wickets in the powerplay is associated with a 37-run decrease, a huge jump from 21 runs for three. This suggests that losing up to three wickets in the powerplay may not be a huge problem, but losing four (or more) seriously hampers a team’s total. The shape of the curve shows that losing wickets in a short space of time, particularly at the start of the innings, is most damaging to a batting team’s eventual total.
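The multiplier effect is easier to see as the marginal cost of each extra powerplay wicket, i.e. the difference between consecutive cumulative figures. A short sketch using the approximate values quoted above from the graph:

```python
# Cumulative run decrease versus losing no powerplay wickets (approximate values from the graph)
cumulative = {0: 0, 1: 5, 2: 15, 3: 21, 4: 37}

# Marginal cost of the k-th powerplay wicket
marginal = {k: cumulative[k] - cumulative[k - 1] for k in range(1, 5)}
print(marginal)  # {1: 5, 2: 10, 3: 6, 4: 16}
```

The fourth wicket alone costs more than the first and third combined.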

To further look into the spacing of wickets, I also created a variable to show whether a team lost wickets in consecutive overs or lost more than one wicket in the same over at some point in the first 14 overs of the innings (limited to 14 due to the small impact of wickets past the 14th over). A regression using this variable estimated that the impact of losing wickets in consecutive overs or multiple wickets in the same over is a decrease of around 11 runs to a team’s total, on top of the already-calculated impact of losing wickets in general. This is further evidence that it is losing wickets in clusters that can be extremely damaging to a team’s score rather than just losing a wicket in general.
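The cluster variable is a simple yes/no flag per innings. A minimal sketch of one way to compute it, assuming the overs in which wickets fell are available as a list of over numbers; `has_wicket_cluster` is a hypothetical name, and in the regression the flag would enter as a 0/1 column alongside the wicket counts:

```python
def has_wicket_cluster(wicket_overs, last_over=14):
    """True if two wickets fell in the same over or in consecutive overs,
    counting only wickets in overs 1..last_over."""
    overs = sorted(o for o in wicket_overs if o <= last_over)
    # After sorting, a same-over pair differs by 0 and a consecutive-over pair by 1
    return any(later - earlier <= 1 for earlier, later in zip(overs, overs[1:]))

print(has_wicket_cluster([2, 8, 15, 16]))  # False: the 15th/16th-over pair is outside the window
print(has_wicket_cluster([3, 3, 10]))      # True: two wickets in the 3rd over
print(has_wicket_cluster([5, 6, 12]))      # True: wickets in overs 5 and 6
```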

Which Wickets Are Being Lost?

We have now looked at when wickets fall and how closely together they fall. Something we haven’t explored, though, is which wickets are being lost. For example, losing the 1st wicket and losing the 5th wicket in the 10th over have very different impacts on a team’s score: losing the 5th wicket in the 10th over is a sign of trouble, while losing the 1st wicket in the 10th over is the most minor of setbacks.

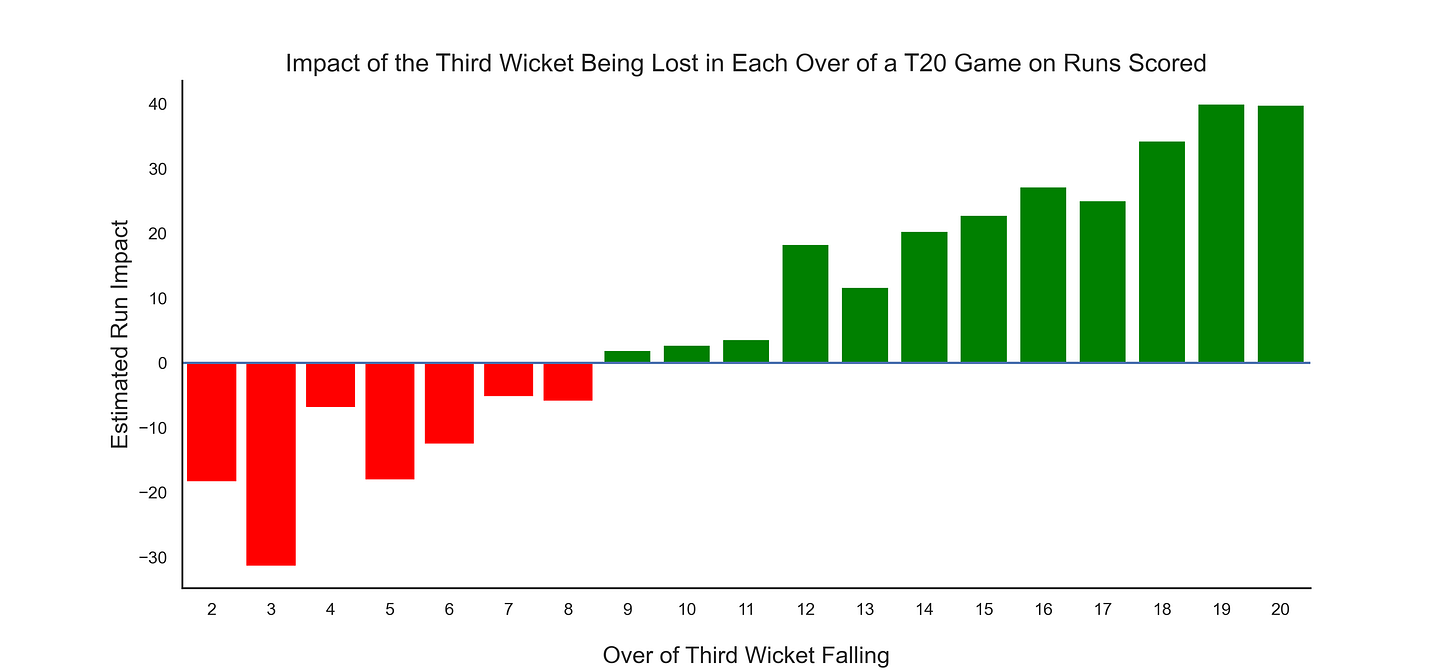

I am not going to bore everyone by looking at every single wicket. But to give a snapshot of how the exact wicket you lose makes a difference, let’s take the third wicket of the batting innings as an example. Almost every innings in our dataset features at least three wickets being lost (just 26 innings saw fewer than 3 wickets), which makes this a useful wicket to look at. I explored how the timing of the 3rd wicket falling impacts the total runs that a team scores. The graph below shows the impact on total runs for losing the third wicket in each over of a match. (Note: this graph does not show the 1st over because there are too few instances of 3 wickets falling in the first over to draw any meaningful insights.)
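One way to build this kind of view is to find the over in which each innings lost its k-th wicket and compare the average total for each over against the overall average. A minimal sketch, assuming innings are stored as (wicket overs, total) pairs; the helper names are hypothetical, and this group-means comparison is a simpler stand-in for whatever regression the article used:

```python
from collections import defaultdict
from statistics import mean

def over_of_kth_wicket(wicket_overs, k=3):
    """Over in which the k-th wicket fell, or None if fewer than k wickets fell."""
    overs = sorted(wicket_overs)
    return overs[k - 1] if len(overs) >= k else None

def runs_vs_average_by_kth_wicket_over(innings, k=3):
    """Mean total for each over in which the k-th wicket fell, minus the mean total of all innings.

    `innings` is a list of (wicket_overs, total) pairs.
    """
    overall = mean(total for _, total in innings)
    groups = defaultdict(list)
    for wicket_overs, total in innings:
        over = over_of_kth_wicket(wicket_overs, k)
        if over is not None:
            groups[over].append(total)
    return {over: float(mean(totals) - overall) for over, totals in sorted(groups.items())}

# Made-up toy innings, NOT the article's data
innings = [([1, 2, 3], 150), ([2, 4, 12], 190), ([5, 6, 12, 18], 200), ([10], 180)]
print(runs_vs_average_by_kth_wicket_over(innings, k=3))  # {3: -30.0, 12: 15.0}
```

Innings with fewer than k wickets still count towards the overall average here; excluding them instead is an equally reasonable design choice.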

There is some noise which muddies the data a little, but the overall pattern is clear: the later in the innings that you lose your third wicket, the more runs you are expected to score. Note that the green bars showing a positive impact do not mean that losing your third wicket in, say, the 12th over will increase your score. Rather, they are a marker that losing your third wicket at that stage of the innings means that you are going well and are likely to make a high-scoring total. We could repeat this exercise for every wicket that falls and it would show a similar pattern: the later in the innings that a given wicket falls, the more runs you expect a team to score because the constraint of losing too many wickets has been diminished.

This is all pretty intuitive, but has real implications for batting strategy in T20 cricket. A team losing their 3rd wicket within the first 8 overs (and particularly in the first 6 overs) are likely to have to reduce their risk-taking to ensure that they do not expose the tail too early in the innings. The longer you get into the innings before you lose your 3rd wicket, the less important wicket-preservation becomes. A team reaching the 14th over without losing their third wicket can effectively throw caution to the wind and take bigger risks with more attacking shots. At that stage, the benefit of scoring quickly is likely to far outweigh the cost of losing a wicket.

The easy conclusion to draw here is that teams should look to preserve wickets upfront before trying to score extremely quickly at the end to ‘catch up.’ This graph seems to show that losing your 3rd wicket later in the innings is a great marker of scoring more runs. But while that might make intuitive sense, it is not the optimal strategy.

Wicket Value and Batting Strategy

Batting teams in T20 cricket have two resources at their disposal: 120 balls and 11 batters. In practice, those 11 batters reduce to 7 or 8 (the ones you would feel comfortable relying on, rather than bowlers with limited batting ability). When batting, then, teams should look to make use of both the 120 balls and their 7 or 8 batters. By batting cautiously upfront, teams do not make use of those resources.

When starting an innings too cautiously, a team is essentially wasting one of those resources (balls faced) and also not properly utilising the other (the batting lineup). Cautious batting means that balls that could be attacked are being defended, not maximising the payoff from each ball faced. This is particularly a problem in the powerplay, where the fielding restrictions make run scoring easier (at least on paper). Not only are balls being wasted, but batting depth is also not being utilised properly. Teams enter games with 7 or 8 batters for a reason: they are (hopefully) good batters. That means teams should feel comfortable with some risk-taking early on because if it goes wrong and wickets are lost, competent batters are still to come.

To the extent that wickets are important to preserve, that is primarily when a batting lineup is in danger of being used up too early. This occurs mostly when wickets are lost in quick succession early in the innings, such as losing four wickets in the powerplay (which we earlier saw has a large impact on run scoring). While wickets might be worth 10+ runs in some overs, that cost does not exist in a vacuum. There is also the opportunity cost of batting conservatively, which may end up being larger than the potential loss that comes with losing a wicket. The opportunity cost in this case is the number of additional runs that a team can score if they attack early on. The 10+ run hit associated with losing a wicket in the powerplay has to be weighed against the number of runs that can be gained by getting off to a flying start. Those much smarter than I am can figure out a way to approximate the exact value of that tradeoff, but it seems likely that it’s pretty heavily weighted in favour of batting aggressively.
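At its simplest, the tradeoff is extra runs from attacking minus the expected extra wickets multiplied by the run cost of a wicket. A minimal linear sketch, in which the +12 runs and +0.5 wickets are purely hypothetical inputs and the ~15 runs per wicket is the article’s rough powerplay estimate; it deliberately ignores the cluster penalty and the jump at four powerplay wickets, which a fuller model would need:

```python
def net_gain_from_attacking(extra_runs, extra_wickets, runs_per_wicket):
    """Hypothetical linear tradeoff: runs gained by attacking minus the expected
    run cost of the extra wickets it produces."""
    return extra_runs - extra_wickets * runs_per_wicket

def break_even_wickets(extra_runs, runs_per_wicket):
    """Extra wickets that attacking can 'afford' before the net gain drops to zero."""
    return extra_runs / runs_per_wicket

# Hypothetical inputs: +12 powerplay runs, +0.5 expected wickets, ~15 runs per powerplay wicket
print(net_gain_from_attacking(12, 0.5, 15))  # 4.5
print(break_even_wickets(12, 15))            # 0.8
```

Under these made-up numbers, attacking pays off as long as it costs fewer than 0.8 extra wickets on average.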

The act of losing wickets should not necessarily make teams act in a risk-averse manner, either. Context and timing are key to deciding on how to bat in reaction to a lost wicket. Resources are there to be used, and losing wickets is a natural part of using those batting resources at a team’s disposal. Where losing wickets does become a problem is when too many are lost too early in the innings, which then carries the risk of running through those resources too quickly. Losing wickets later in the innings, though, should not be a cause for concern. It should be viewed simply as par for the course, with trust placed in the remaining available batters to make use of the remaining balls.

To put it in less abstract terms, imagine two alternative batting strategies, Strategy A and Strategy B. Strategy A focuses on wicket preservation until the death overs, and then looks to bat extremely quickly at the end of the innings with no concern about losing wickets. Strategy B focuses on attacking the powerplay, hoping to maximise early runs and with less concern for losing wickets. The ceiling for Strategy A is likely somewhere around 160-170 runs, as the cautious early batting places a limit on how many runs can be scored regardless of how attacking a team is at the death. Strategy B’s ceiling is likely much higher, perhaps around 220 runs if a player scores a quick 100 and/or all players score quickly. Strategy B does carry more risk, of course, with wickets likely to fall more frequently. But a team with adaptable tactics can switch towards Strategy A if the risk does not pay off and they lose early wickets. At that point, their likely ceiling drops to somewhere around 150-160 if they bat cautiously to preserve wickets until the death. With both Strategy A and B, a total of 150-160 is relatively likely. The difference, however, is that the best-case scenario is far higher with the more aggressive Strategy B.

Conclusion

To sum up, the value of wickets in T20 innings is relatively high at the start of the innings, being worth 12-18 runs in the powerplay. The value of wickets diminishes as the innings goes on, until they are worth almost nothing in the final over. Wickets lost in clusters, particularly early on in the innings, are most damaging to a team’s batting total. While we can assign a run value to a wicket in a given over, the actual impact of a wicket on a team’s strategy is based on which wicket is falling at which point in the innings. Wickets should be viewed as a normal part of building a high-scoring T20 innings and should only impact a team’s strategy if they threaten to stop the batting team from making use of their remaining balls and the batters still to come in. So, teams should optimise for run scoring and react to wickets falling, rather than optimising for few wickets falling.

This takes us back to our original story of Rajasthan retiring Ravi Ashwin. They reacted to losing early wickets (which they lost while trying to score quick early runs) by batting more cautiously and steadying the innings, and then allowed a fast scorer to take advantage of the death overs. It is a great encapsulation of the fact that wickets do matter, but mainly to the extent that they threaten to stop a team using its resources effectively.

I am far from the first person to tackle this topic. For more insights, take a look at work from David Barry, Sport Data Science, and Tom Nielsen, all of whose work I used for inspiration. There is much more work that could be done on this topic, such as incorporating the quality of a batter into the calculation for which wickets are most valuable (e.g. dismissing Jos Buttler might be more valuable than dismissing Kane Williamson in this year’s IPL). There’s also scope to incorporate the risk associated with boundary hitting to more precisely quantify the optimal batting strategy and calculate the opportunity cost associated with losing a wicket and batting cautiously.

To view the code that I used to make this article, see this Google Doc.